![[Univ of Cambridge]](graphics/uniban-s.gif)  ![[Dept of Engineering]](graphics/engban-s.gif) |

| project home page |

|

CamSeq01 Dataset, Cambridge Labeled Objects in Video, |

|

CamSeq01 is a

groundtruth dataset that can be freely used for research work in object

recognition in video. This database is unique since it is a video

sequence and consists of high resolution images. It includes the

original frame sequence and the corresponding labeled frames which

constitute the groundtruth. In the labeled frames, each object has been

painted with a given class colour by human operators. Applications of

this dataset include: object recognition, object label propagation,

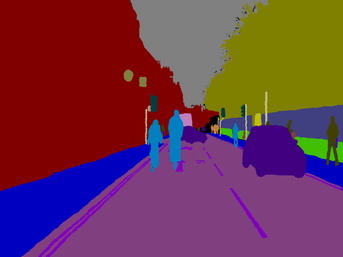

object tracking ... This dataset has been originally designed for the problem of automated driving vehicle. This sequence depicts a moving driving scene in the city of Cambridge filmed from a moving car. It is a challenging dataset since, in addition to the car ego-motion, other cars, bicyles and pedestrians have their own motion and they often occlude one another. Note: more ground truth datasets similar to this one are currently (as of October 2007) being prepared and will be released soon. Dataset description It consists of 101 960x720 pixel images in which each pixel was manually assigned to one of the following 32 object classes that are relevant in a driving environment:  list of class labels and corresponding colours The "void" label indicates an area which ambiguous or irrelevant in this context. The colour/class association is given in the file label_colors.txt. Each line has the R G B values (between 0 and 255) and then the class name. Example of original and labeled frames:

Image format and naming All images (original and groundtruth) are in uncompressed 24-bit color PNG format. For each frame from the original sequence, its corresponding labeled frame bears the same name, with an extra "_L" before the ".png" extension. For example, the first frame is "0016E5_07959.png" and the corresponding labeled frame is "0016E5_07959_L.png". Capture protocol We drove in the streets of Cambridge UK, with a camera mounted on the passenger seat in a car. A high definition Panasonic HVX200 digital camera was used, capturing 960 × 720 pixel frames at 30fps. We filmed about 2 hours. The CaTLOV dataset is a sub-sequence of 101 consecutives frames subsampled every other frames (from 202 frames). It corresponds to 6 seconds of continuous driving.  camera setup in the car Hand labeling for building the groundtruth We hired people to produce manually the labeled maps for each of the 101 frames. They painted the areas corresponding to a predefined list of 32 objects of interest given a specific palette of colors. They have used a program that we developed for this task which offers various automatic segmentations along with floodfilling and manual painting capabilities. We corrected the labeled sequence to make sure that no object was left out and that the “labeling style” (which varies depending on the person) was consistent across the sequence. By logging and timing each stroke, we were able to estimate the hand labeling time for one frame to be around 20-25 minutes (this duration can vary greatly depending on the complexity of the scene). Citation If you intend to use this database, please cite the following paper:

Download Dataset: CamSeq01.zip (92Mb) It contains the 101 original frames + the 101 corresponding groundtruth frames + the colour/class file label_colors.txt. Other visual recognition datasets Video: Still images:

Note: Release of the CamToy database: we are in the process of releasing the CamToy database, which has been captured and labeled following the same protocol as for CamSeq01. It consists of longer video sequences (original and labeled). In the mean time, you can watch a preview video of the database. Acknowledgement This work has been carried out with the support of Toyota Motor Europe. Contact Julien Fauqueur (  ) ) Gabriel Brostow (  )

) Roberto Cipolla (  )

) |

|

|