Dr Graham Treece, Department of Engineering

F-GMT11-1: Design of colour and material maps in volume rendering

Volume rendering is potentially a very powerful visualisation tool for medical imaging and for micro-CT scans of other objects, but it relies on defining the colours and various material properties carefully and on being able to adjust them easily. What is the best set of colours and parameters to use in each case? Can this be designed automatically, or perhaps copied from a photo?

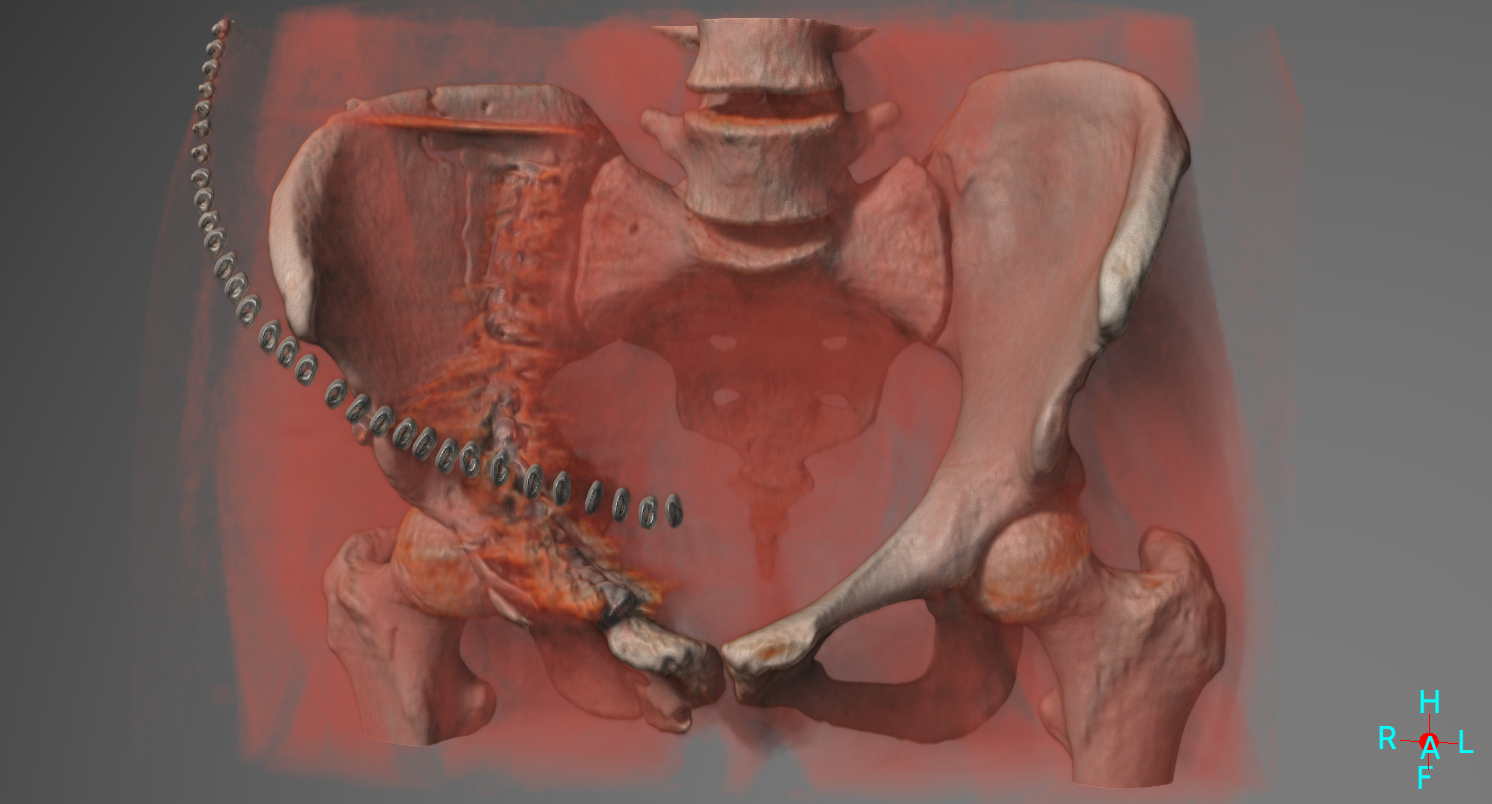

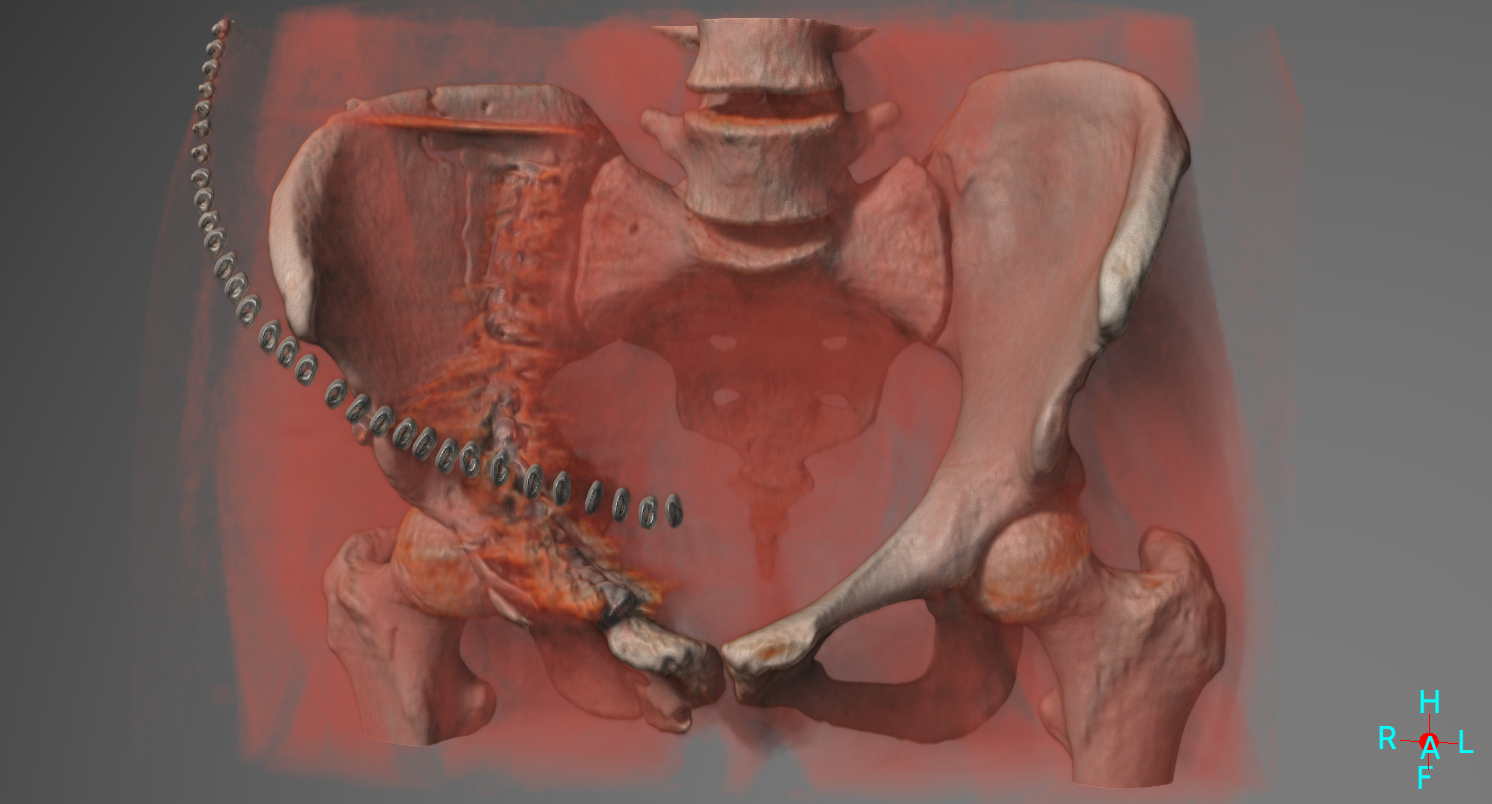

Volume rendering is a way of directly visualising 3D tomographic data, and the result is much easier to understand and investigate than the original cross-sectional images. The technique has been around for a while, but both the quality and the rendering speed have improved considerably in the last decade, which means it can now be used even on large data sets (e.g. micro-CT) on fairly standard computers.

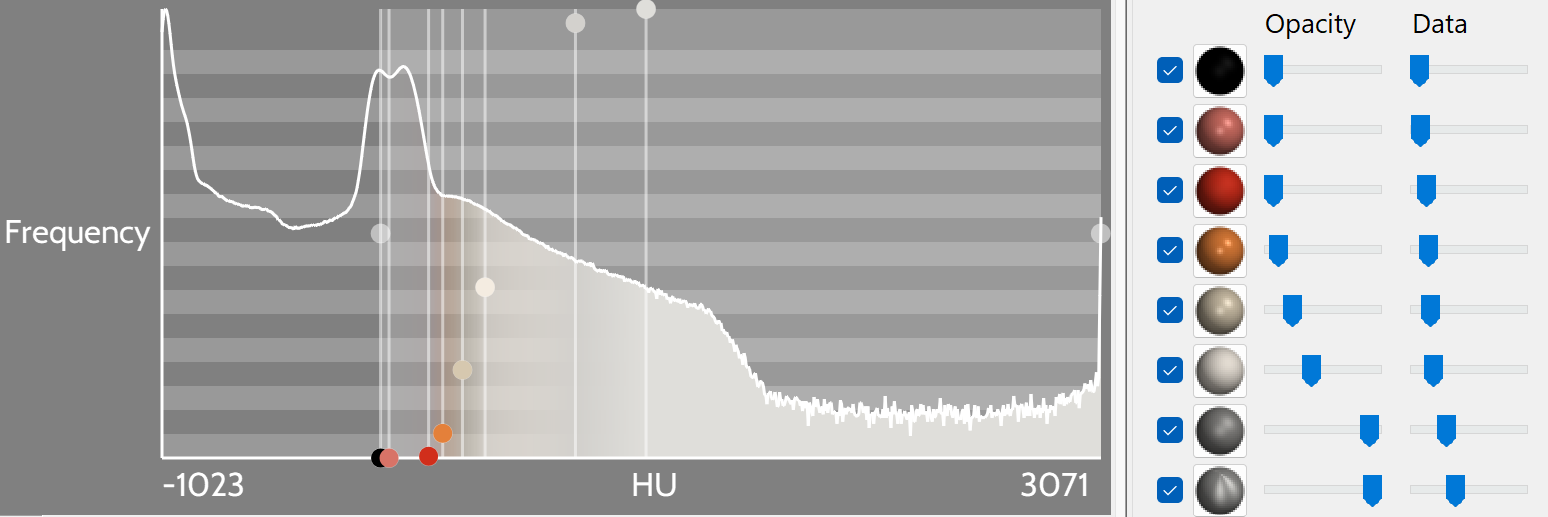

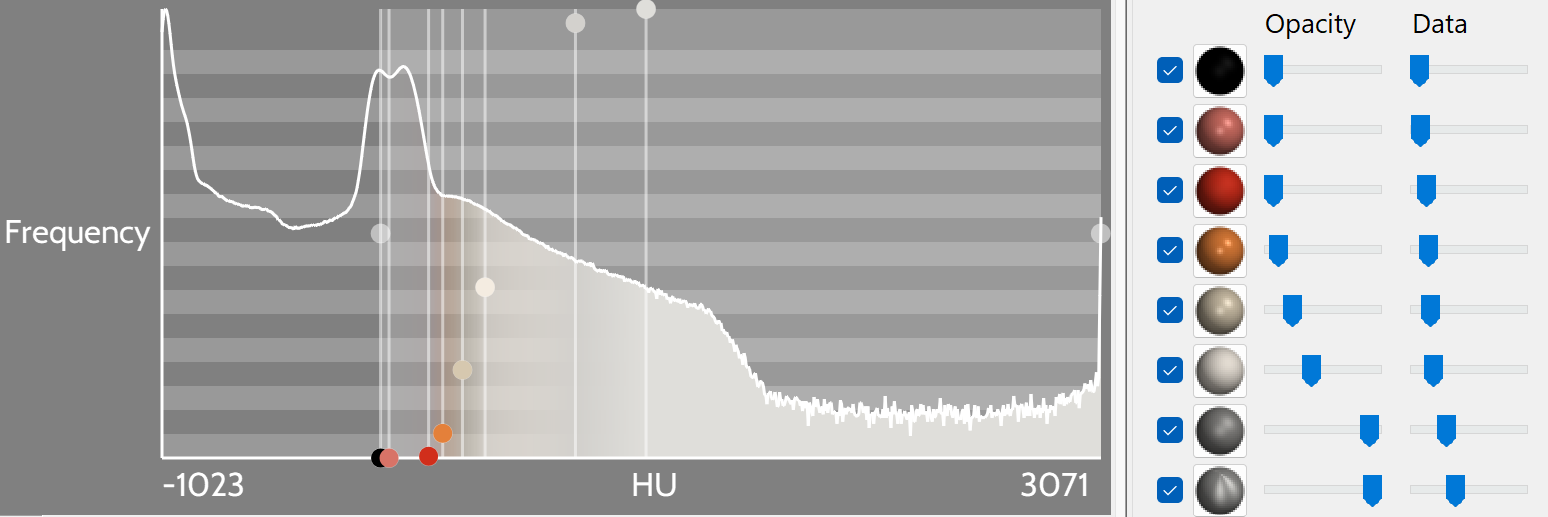

The process involves designing colour maps (or transfer functions) which relate the original image values (for instance density for CT data) to colour, opacity and potentially other material parameters such as roughness. Many different sorts of transfer functions have been proposed, some of which make use of data gradients or curvatures as well as data values. However, the limiting factor for all of these is often the complexity of defining the actual colour mapping. Standard transfer functions are usually provided which 'work' for certain scenarios. Editing these would nearly always produce a better result, even when the scenario fits, but doing so is often a very counter-intuitive task.
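To make the idea of a transfer function concrete, here is a minimal Python sketch of the simplest kind: a one-dimensional, piecewise-linear map from a normalised data value to colour and opacity, followed by front-to-back compositing along a single ray. The control points, the names `CONTROL_POINTS`, `transfer_function` and `composite_ray`, and the normalisation of data values to the range 0 to 1 are all assumptions made for illustration; they are not taken from any particular renderer.

```python
from bisect import bisect_right

# Hypothetical control points: (data value, (R, G, B, A)), sorted by value.
# The numbers are illustrative only and not calibrated to any real CT scale.
CONTROL_POINTS = [
    (0.0, (0.0, 0.0, 0.0, 0.0)),  # lowest values: fully transparent
    (0.3, (0.8, 0.4, 0.3, 0.1)),  # mid-low values: reddish, mostly transparent
    (0.7, (1.0, 0.9, 0.8, 0.8)),  # mid-high values: off-white, mostly opaque
    (1.0, (1.0, 1.0, 1.0, 1.0)),  # highest values: white, fully opaque
]


def transfer_function(value, points=CONTROL_POINTS):
    """Map a scalar data value to RGBA by piecewise-linear interpolation."""
    values = [v for v, _ in points]
    if value <= values[0]:
        return points[0][1]
    if value >= values[-1]:
        return points[-1][1]
    i = bisect_right(values, value)  # index of first control point above value
    (v0, c0), (v1, c1) = points[i - 1], points[i]
    t = (value - v0) / (v1 - v0)
    return tuple(a + t * (b - a) for a, b in zip(c0, c1))


def composite_ray(samples, points=CONTROL_POINTS):
    """Front-to-back alpha compositing of the data samples along one ray."""
    rgb = [0.0, 0.0, 0.0]
    alpha = 0.0
    for s in samples:
        r, g, b, a = transfer_function(s, points)
        weight = (1.0 - alpha) * a  # contribution left after what is in front
        rgb = [acc + weight * c for acc, c in zip(rgb, (r, g, b))]
        alpha += weight
        if alpha >= 0.99:  # early termination: the ray is effectively opaque
            break
    return (*rgb, alpha)
```

Even in this tiny example, the appearance of the result depends on every entry in the control-point list, which gives some sense of why hand-editing such maps becomes unintuitive as more materials and parameters are added.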

So to what extent can these mappings be automatically designed for each specific data set? There are several interesting avenues to pursue here. For instance, there is a lot of work on colour spaces and how we perceive colour: what are the best combinations of colours, and how should they be combined, to achieve the best possible contrast in a volume rendering, and hence allow you to see as many individual features as possible? Or alternatively, if it is desired to match a volume rendering to a real object, can the set of colours and material parameters be automatically extracted from, say, a photo, and applied to the data so that the rendering closely matches reality?
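As one possible starting point for the perceptual question, the sketch below converts sRGB colours to CIELAB and scores a candidate palette by its smallest pairwise colour difference, using the simple CIE76 Euclidean distance. The choice of CIELAB with a D65 white point, the CIE76 metric, and the function names `srgb_to_lab`, `delta_e` and `min_pairwise_contrast` are assumptions made for this illustration; the project itself might well settle on a different colour space or difference measure.

```python
import math
from itertools import combinations

# D65 reference white, matching the sRGB primaries used below.
WHITE_D65 = (0.95047, 1.0, 1.08883)


def _linearise(c):
    """Undo the sRGB gamma curve for one channel in the range 0..1."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t):
    """Nonlinearity used in the CIELAB definition."""
    delta = 6.0 / 29.0
    return t ** (1.0 / 3.0) if t > delta ** 3 else t / (3 * delta ** 2) + 4.0 / 29.0


def srgb_to_lab(rgb):
    """Convert an sRGB colour (components in 0..1) to CIELAB (L*, a*, b*)."""
    r, g, b = (_linearise(c) for c in rgb)
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    fx, fy, fz = (_f(v / n) for v, n in zip((x, y, z), WHITE_D65))
    return (116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz))


def delta_e(rgb1, rgb2):
    """CIE76 colour difference: Euclidean distance in CIELAB."""
    return math.dist(srgb_to_lab(rgb1), srgb_to_lab(rgb2))


def min_pairwise_contrast(palette):
    """Smallest colour difference between any two palette entries."""
    return min(delta_e(p, q) for p, q in combinations(palette, 2))
```

A palette search could then try to maximise `min_pairwise_contrast` over candidate colour sets, although this ignores opacity, lighting and the blending of colours along a ray, all of which affect what is actually seen in a volume rendering.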

This is an algorithmic development / software project, so experience of writing software is essential. C++ and GLSL would be helpful, though the development could be done using another programming environment, and the project could be used as a way to learn a shading language such as GLSL.
