
Dr Graham Treece, Department of Engineering

F-GMT11-2: Adjustment for prior smoothing in medical image analysis

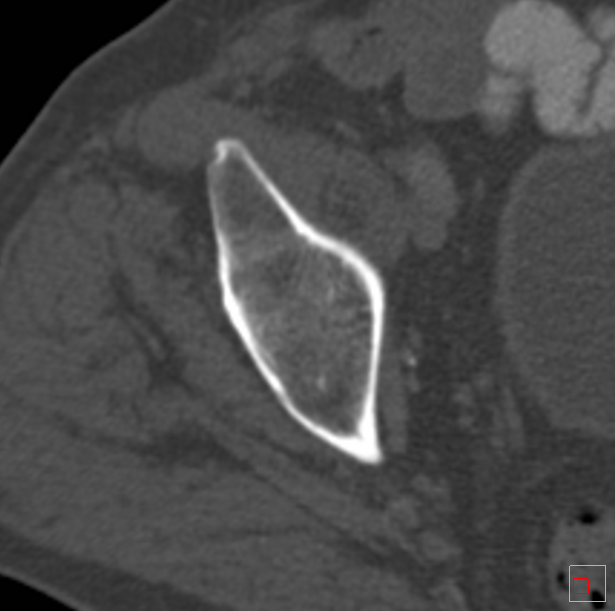

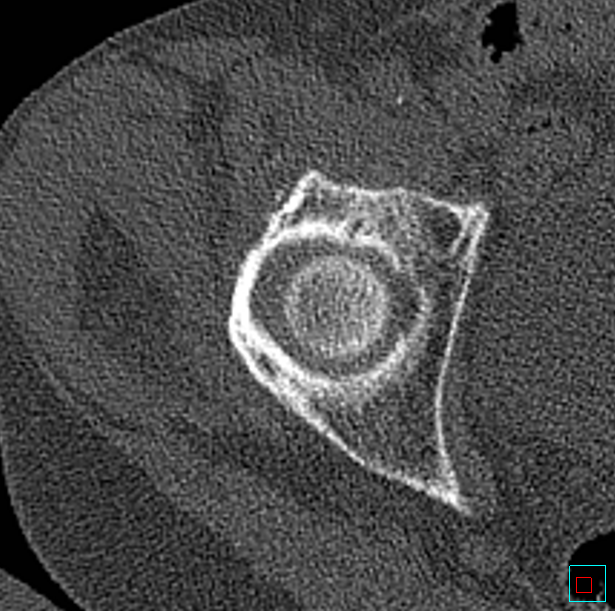

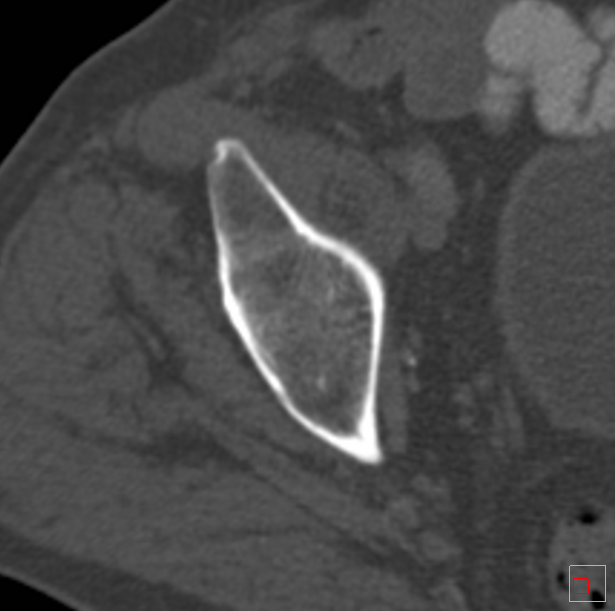

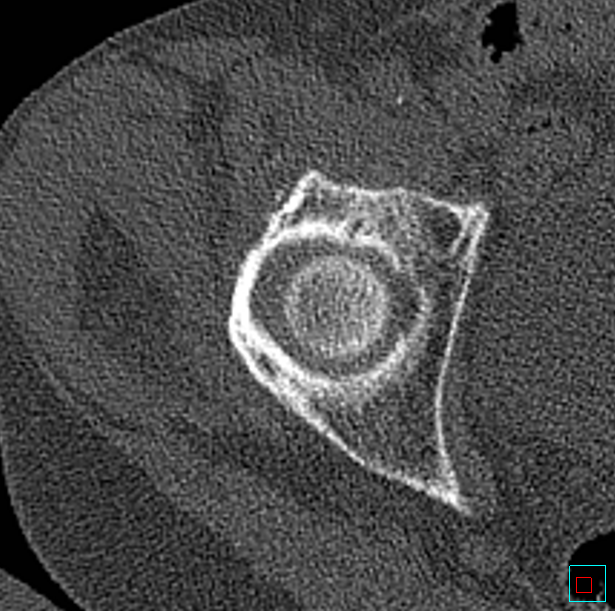

Medical data is often reconstructed in different ways; for instance, this CT uses a 'kernel' designed for soft tissue.

This causes different smoothing and noise levels. Here the bone kernel actually sharpens the data.

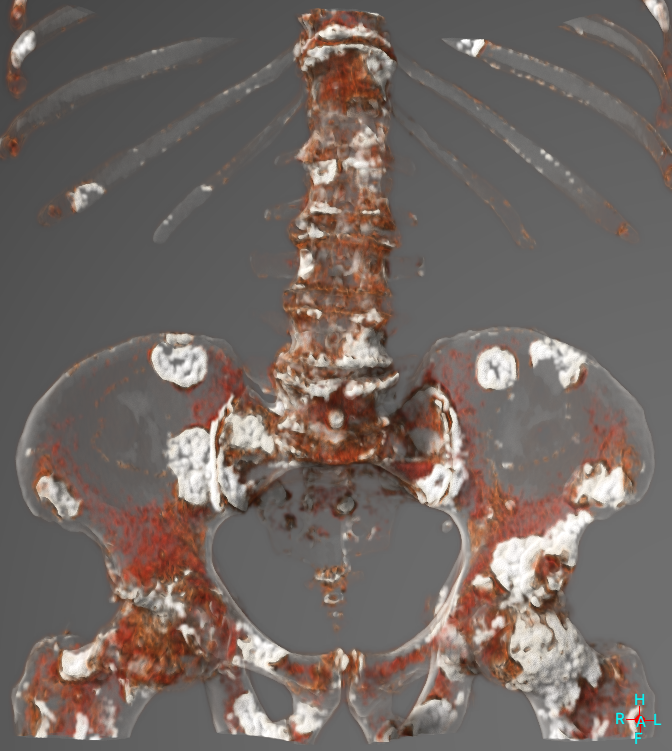

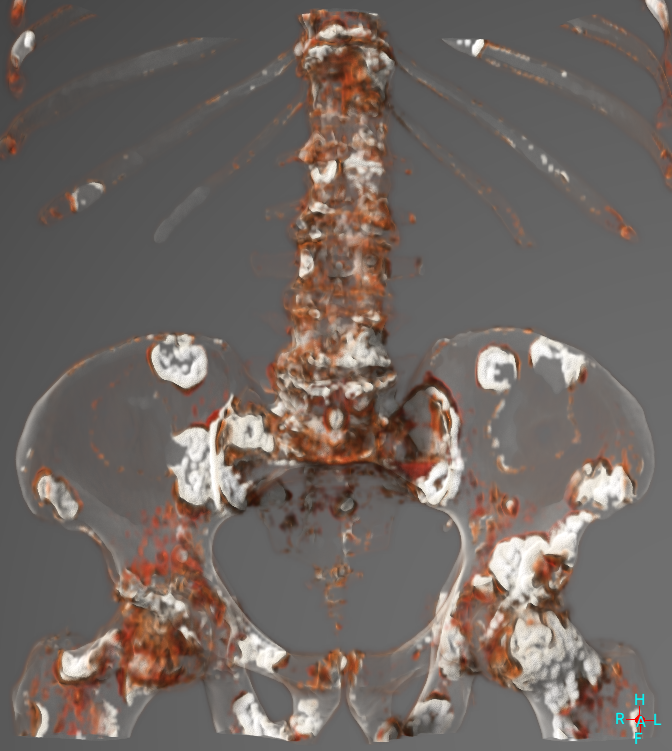

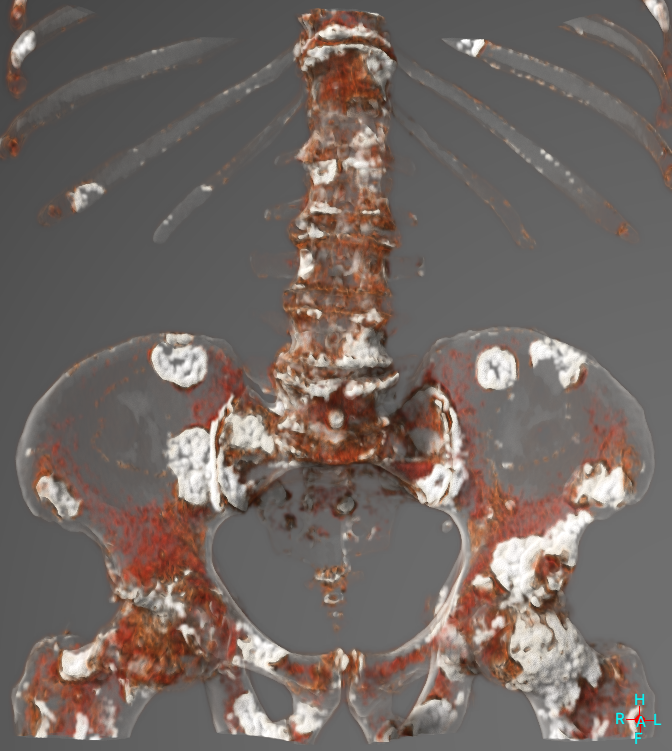

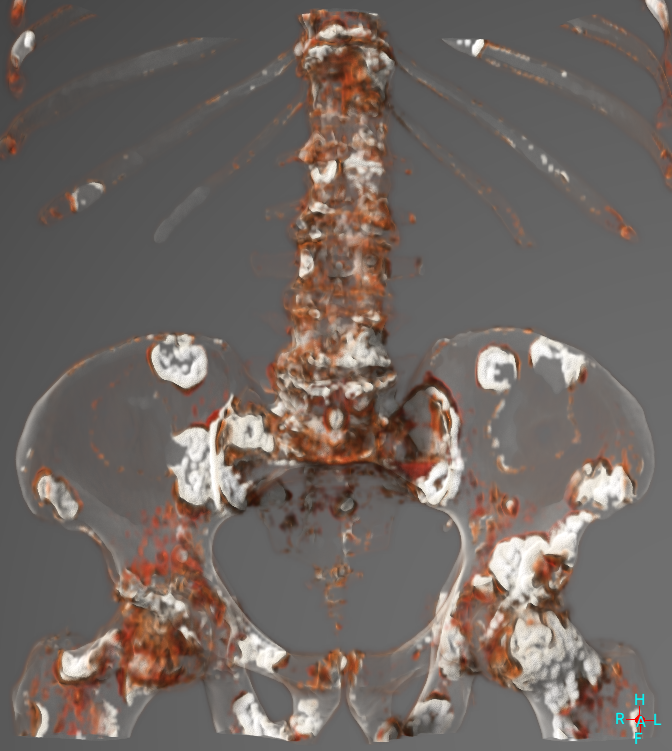

But different levels of smoothing affect later processing of the data, for instance visualisation of metastases.

Automatically enforcing a particular smoothing level would ensure processing was optimal / reliable.

Medical image data always has some level of smoothing. This can result either from the finite resolution of the physical measurement process, or from smoothing or sharpening deliberately applied during reconstruction. For CT data, this is determined by the choice of reconstruction 'kernel', which varies between CT scans and between scanner manufacturers. These kernels are described only by name (for instance 'bone', 'soft tissue', or 'B30f'), and the clinician will generally have little knowledge of how the kernel affects the data, other than choosing the appropriate one for what they want to look at. Hence there is no specific record in the data of how much smoothing has actually been applied.

However, various data analysis techniques, for instance segmentation, measurement of cortical properties, or visualisation, are affected by the amount of smoothing in the data. In particular, modern algorithms which can de-convolve (de-blur) data tend to work better when there is already a reasonable degree of smoothing, and are adversely affected by sharpening. It would therefore be ideal to set the total amount of smoothing in a data set explicitly before applying these techniques.
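As a rough illustration of why smoothing matters for measurements of thin structures such as cortical bone, the sketch below assumes the blur can be modelled as a Gaussian of standard deviation `sigma_mm` (an assumption, since real reconstruction kernels are generally not Gaussian). Under that model, a uniform layer of width `width_mm` and unit density has its peak value reduced to erf(w / (2√2 σ)) after blurring. The function name `peak_response` and the example widths are hypothetical and chosen only for illustration.

```python
import math


def peak_response(width_mm, sigma_mm):
    """Peak value of a unit-density layer after Gaussian blurring.

    Assumes an idealised 1D layer of the given width, blurred by a
    Gaussian with standard deviation sigma_mm (an illustrative model,
    not a description of any particular CT kernel).
    """
    if sigma_mm <= 0:
        return 1.0  # no blur: the peak density is preserved
    return math.erf(width_mm / (2.0 * math.sqrt(2.0) * sigma_mm))


# A 1 mm layer viewed at increasing (hypothetical) blur levels.
for sigma in (0.3, 0.6, 1.2):
    print(f"sigma = {sigma:.1f} mm -> peak = {peak_response(1.0, sigma):.2f}")
```

The apparent density of the same layer falls as the blur increases, so a measurement made on the raw values would differ between reconstructions unless the smoothing level is known or controlled.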

Hence this project concerns three closely related questions. To what extent do different levels of smoothing affect the results of typical analysis techniques? Can the existing amount of smoothing (and possibly also the residual noise levels) be reliably (and quickly) estimated from typical medical data sets? And hence can additional smoothing be automatically applied to data to ensure that the analysis techniques are optimally applied without the user needing to concern themselves with this?
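The second and third questions can be sketched in one dimension under a strong simplifying assumption: that both the existing and the target smoothing are Gaussian. In that case the gradient of a blurred step edge is itself a Gaussian, so its standard deviation gives an estimate of the existing blur, and because variances add under Gaussian convolution, the extra blur needed is sqrt(target² − existing²). This is only an illustrative model, not the method the project would necessarily adopt: it ignores noise, assumes an isolated edge, and cannot represent sharpening kernels. All function names below are hypothetical.

```python
import math


def gaussian_kernel(sigma, spacing=1.0):
    """Sampled, normalised Gaussian kernel truncated at 4 sigma."""
    half = max(1, int(math.ceil(4.0 * sigma / spacing)))
    weights = [math.exp(-0.5 * (i * spacing / sigma) ** 2)
               for i in range(-half, half + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth(profile, sigma, spacing=1.0):
    """Convolve a 1D profile with a Gaussian, replicating the end samples."""
    if sigma <= 0:
        return list(profile)
    kernel = gaussian_kernel(sigma, spacing)
    half = len(kernel) // 2
    n = len(profile)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - half, 0), n - 1)  # clamp index at the ends
            acc += w * profile[j]
        out.append(acc)
    return out


def estimate_sigma(edge_profile, spacing=1.0):
    """Estimate Gaussian blur as the standard deviation of an edge's gradient.

    Assumes the profile contains a single, isolated, noise-free step edge.
    """
    grad = [b - a for a, b in zip(edge_profile, edge_profile[1:])]
    total = sum(grad)
    if total == 0:
        raise ValueError("profile contains no net edge")
    pos = [(i + 0.5) * spacing for i in range(len(grad))]
    mean = sum(p * g for p, g in zip(pos, grad)) / total
    var = sum(g * (p - mean) ** 2 for p, g in zip(pos, grad)) / total
    return math.sqrt(max(var, 0.0))


def additional_sigma(existing, target):
    """Extra Gaussian blur needed to reach the target total blur."""
    if target <= existing:
        return 0.0  # already at or beyond the target; smoothing cannot sharpen
    return math.sqrt(target ** 2 - existing ** 2)


# Synthetic example: a step edge sampled at 0.1 mm, blurred by 0.8 mm.
spacing = 0.1
step = [0.0] * 200 + [1.0] * 200
blurred = smooth(step, 0.8, spacing)

est = estimate_sigma(blurred, spacing)
extra = additional_sigma(est, 1.5)  # hypothetical target of 1.5 mm
adjusted = smooth(blurred, extra, spacing)
final = estimate_sigma(adjusted, spacing)

print(f"estimated existing blur: {est:.3f} mm")
print(f"additional blur applied: {extra:.3f} mm")
print(f"estimated total blur:    {final:.3f} mm")
```

On real data the edge would first have to be located and the estimate made robust to noise and neighbouring structures, which is part of what the project sets out to investigate.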

This is an algorithmic development / software project, so experience of writing software is essential, though the development could be done using any appropriate programming environment.
